Author: 曾成訓 (CH.Tseng)

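Haar Cascade is an object detection method provided by OpenCV, and it is very easy to use: load a single resource file, known as an XML classifier, and you can immediately start detecting objects in images, such as faces.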

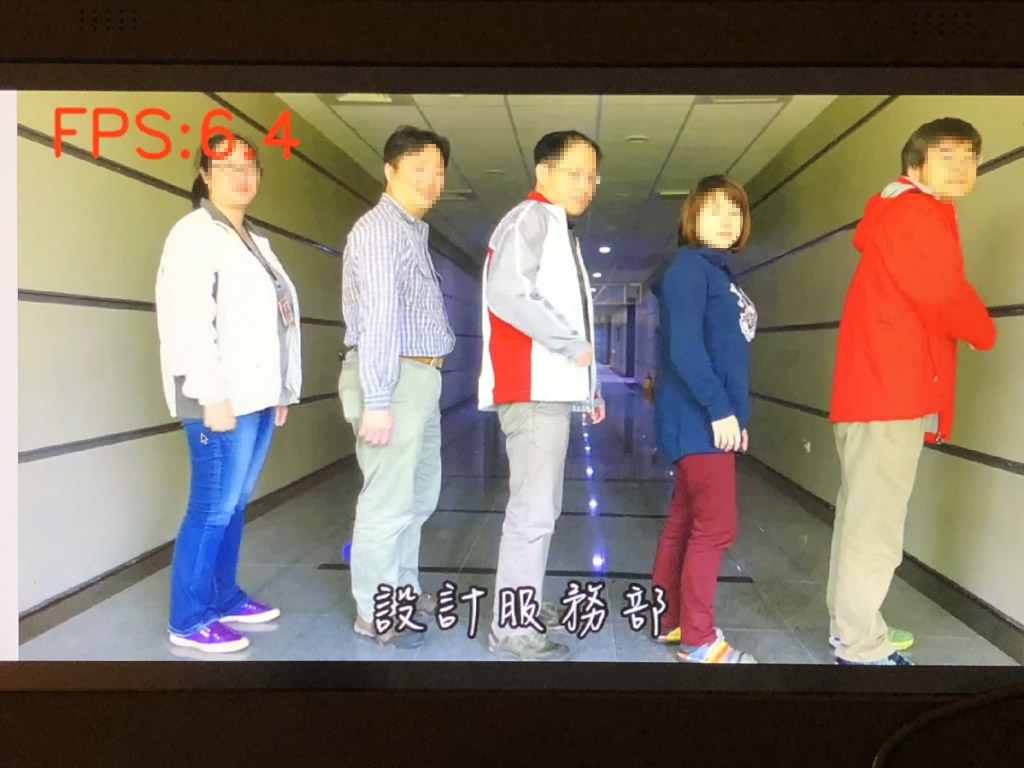

Face detection slows down playback

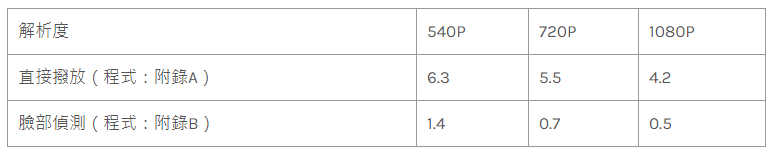

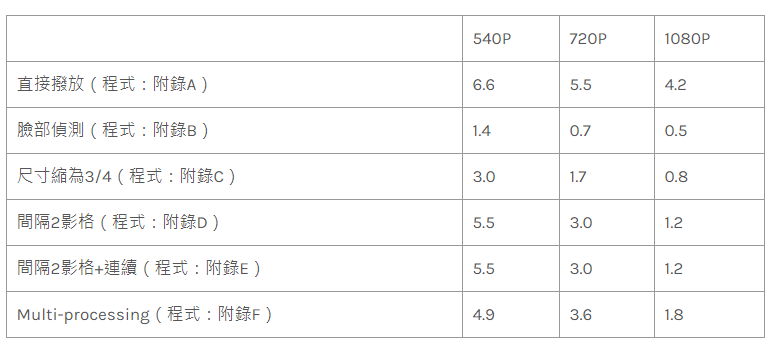

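Compared with deep learning approaches, Haar Cascade is fast, but on resource-constrained embedded systems or single-board computers, running it from Python is still not very fast. The two figures below compare playback speed for videos of different resolutions, with plain playback versus playback with face detection:

- Plain video playback

- Video playback + face detection

(Image source: provided by 曾成訓)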

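As the figures show, even Haar Cascade, one of the fastest face detection methods, still has a large impact on system performance. To reduce the load Haar Cascade puts on the system and keep things running more smoothly, you can apply the techniques below (each step is mainly an incremental modification of the program in Appendix B).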

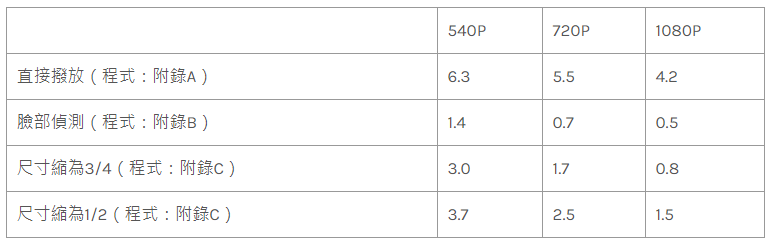

Detect on a smaller image

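The larger the image, the longer Haar Cascade takes to scan it. So before running face detection, first scale the frame down proportionally and detect faces on the smaller copy, then scale the resulting (x, y, w, h) bounding boxes back up by the same ratio. The displayed video keeps its original size, but detection becomes considerably faster. The test results are shown below: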

(Image source: provided by 曾成訓)
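The coordinate bookkeeping behind this step can be sketched in plain Python. The helper names `detection_size` and `to_original_coords` are hypothetical (they do not appear in the appendix code), and the sketch assumes the frame is shrunk by the same ratio on both axes, as in Appendix C where `resize_ratio = 0.75`.

```python
def detection_size(width, height, resize_ratio):
    """Size (w, h) to pass to cv2.resize before running detection."""
    return (int(width * resize_ratio), int(height * resize_ratio))


def to_original_coords(boxes, resize_ratio):
    """Scale (x, y, w, h) boxes found on the shrunken frame back to the
    full-size frame by dividing every value by resize_ratio."""
    return [tuple(int(v / resize_ratio) for v in box) for box in boxes]


if __name__ == "__main__":
    ratio = 0.75
    # A 960x540 frame is searched at 720x405 ...
    print(detection_size(960, 540, ratio))                # (720, 405)
    # ... which is only ratio**2 of the original pixel count.
    print(ratio ** 2)                                     # 0.5625
    # A face found at (30, 60, 45, 45) on the small frame maps back to:
    print(to_original_coords([(30, 60, 45, 45)], ratio))  # [(40, 80, 60, 60)]
```

With a ratio of 0.75 the detector scans roughly 56% of the original pixels, which is where the speedup comes from; the exact gain will still depend on scaleFactor, minSize and the hardware.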

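With this approach, keep in mind that shrinking the image can affect how well faces are detected; if necessary, adjust parameters such as scaleFactor, minNeighbors and minSize to maintain detection quality.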
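One concrete effect of shrinking shows up in `minSize`: the limit is given in pixels of the shrunken frame, so it corresponds to a larger face in the original video. The sketch below (helper names are hypothetical) converts between the two, assuming uniform scaling by `resize_ratio`.

```python
def min_size_for_small_frame(min_size_original, resize_ratio):
    """minSize to pass to detectMultiScale on the shrunken frame so that the
    smallest detectable face stays about the same size in the original frame."""
    w, h = min_size_original
    return (max(1, int(w * resize_ratio)), max(1, int(h * resize_ratio)))


def effective_min_size(min_size_small, resize_ratio):
    """Smallest face, in original-frame pixels, that a given minSize on the
    shrunken frame corresponds to."""
    w, h = min_size_small
    return (int(w / resize_ratio), int(h / resize_ratio))


if __name__ == "__main__":
    # Appendix C keeps minSize=(30, 30) while shrinking frames to 0.75 ...
    print(effective_min_size((30, 30), 0.75))        # (40, 40)
    # ... so to keep roughly the original 30 px limit, use instead:
    print(min_size_for_small_frame((30, 30), 0.75))  # (22, 22)
```

In other words, keeping `minSize=(30, 30)` after shrinking to 0.75 means faces smaller than roughly 40 px in the original video are skipped. Whether that is acceptable depends on how small the faces in your video are.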

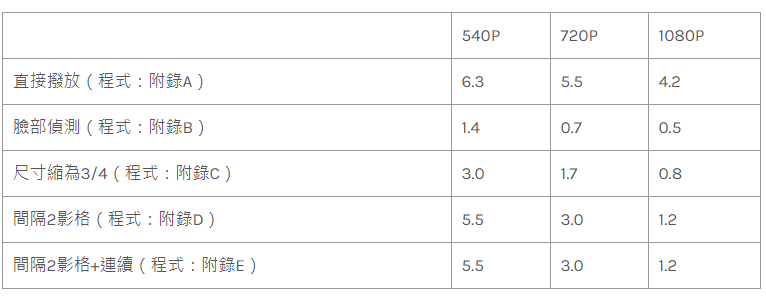

Detect only every few frames

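Video typically runs at about 30 frames per second, so there is no need to check every single frame for faces; running detection once every few frames is enough.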
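With `interval = 5` (the value used in Appendix D), a 30 fps video needs only 6 detections per second instead of 30. The frame-selection test and that arithmetic can be written as follows; the function names are hypothetical.

```python
def should_detect(frame_index, interval):
    """True on the frames where face detection runs: every `interval` frames,
    starting with frame 0 (the same `ii % interval == 0` test as Appendix D)."""
    return frame_index % interval == 0


def detections_per_second(video_fps, interval):
    """How many detection calls per second remain after skipping frames."""
    return video_fps / interval


if __name__ == "__main__":
    print([i for i in range(12) if should_detect(i, 5)])  # [0, 5, 10]
    print(detections_per_second(30, 5))                   # 6.0
```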

(Image source: provided by 曾成訓)

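Detecting faces only once every few frames raises the speed noticeably again. However, it introduces a problem: because detection is intermittent, the face bounding boxes flicker, and the video no longer looks smooth and continuous. So we add one more feature: on frames where no face detection runs, the boxes from the previous detection stay on screen until a new set of boxes is produced. This eliminates the flicker without slowing detection down.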

(Image source: provided by 曾成訓)
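The "keep the previous boxes" behavior can be isolated in a small wrapper. This is a sketch, not the author's code: `IntervalFaceTracker` is a hypothetical name, and `detect_fn` stands for any function with the shape of the appendix's `getFaces()`, taking a frame and returning a list of `(x, y, w, h)` boxes.

```python
class IntervalFaceTracker:
    """Runs `detect_fn` only every `interval` frames and returns the most
    recent boxes on the frames in between, so rectangles can be drawn on
    every frame instead of flickering."""

    def __init__(self, detect_fn, interval):
        self.detect_fn = detect_fn  # e.g. getFaces from the appendix
        self.interval = interval
        self.frame_index = 0
        self.last_boxes = []

    def update(self, frame):
        if self.frame_index % self.interval == 0:
            self.last_boxes = self.detect_fn(frame)
        self.frame_index += 1
        return self.last_boxes


if __name__ == "__main__":
    calls = []

    def fake_detect(frame):
        # Hypothetical stand-in detector: records the call, returns one box
        calls.append(frame)
        return [(frame, frame, 10, 10)]

    tracker = IntervalFaceTracker(fake_detect, interval=3)
    for frame in range(7):
        print(frame, tracker.update(frame))
    print(calls)  # [0, 3, 6]
```

In the display loop you would call `boxes = tracker.update(img)` on every frame and draw every box in `boxes`; if detection runs on a shrunken frame, the boxes still need to be scaled back before drawing.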

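Note also that with this approach, when a face moves a long way between two frames (that is, it moves very fast), the bounding box can lag behind it. To address this, you can measure how different consecutive frames are and run detection immediately when the difference is large, instead of waiting for the next interval (for example with scikit-image's compare_ssim, which newer scikit-image releases provide as `skimage.metrics.structural_similarity`).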
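A sketch of that trigger, with one swap: instead of SSIM it uses a simple mean absolute pixel difference between consecutive grayscale frames, which needs only NumPy (`skimage.metrics.structural_similarity` could be dropped in instead). The `threshold` of 12.0 is a hypothetical starting value to tune per video, and the function names are illustrative.

```python
import numpy as np


def frames_differ(prev_gray, curr_gray, threshold=12.0):
    """True when the mean absolute difference of two grayscale frames
    (uint8 arrays of the same shape) exceeds `threshold`."""
    if prev_gray is None:
        return True
    # Widen to int16 so the subtraction cannot wrap around as uint8 would
    diff = np.abs(curr_gray.astype(np.int16) - prev_gray.astype(np.int16))
    return float(diff.mean()) > threshold


def need_detection(frame_index, interval, prev_gray, curr_gray, threshold=12.0):
    """Detect on the regular interval, or earlier if the scene changed a lot."""
    return frame_index % interval == 0 or frames_differ(prev_gray, curr_gray, threshold)


if __name__ == "__main__":
    still = np.zeros((120, 160), dtype=np.uint8)
    moved = still.copy()
    moved[:, :80] = 200  # left half brightens: mean difference = 100
    print(need_detection(3, 5, still, still))  # False: off-interval, no change
    print(need_detection(3, 5, still, moved))  # True: large change forces detection
```

Comparing frames costs time too, so it is probably best done on the already shrunken grayscale frame, keeping `prev_gray = curr_gray` at the end of each loop iteration.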

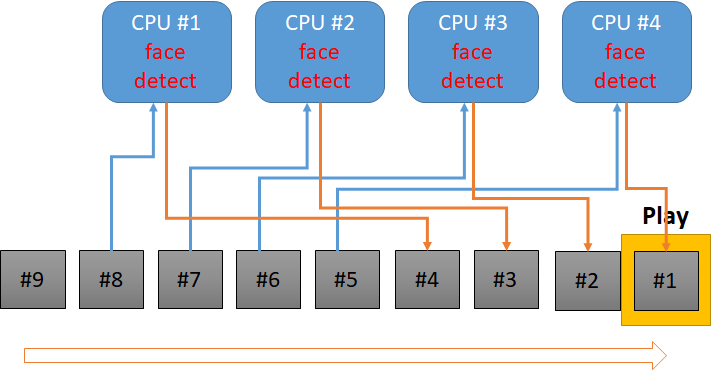

Multi-processing (parallel processing)

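Most CPUs today are multi-core, and the Raspberry Pi is no exception, with four cores. When our program runs face detection in a single Python process, it mostly keeps only one of those cores busy and leaves the others idle, which is a waste. So in the experiment below, we hand face detection off to the other CPU cores, get the results back asynchronously, and then draw them on the frame.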

(Image source: provided by 曾成訓)

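The Appendix F example below uses Python's multiprocessing module to run face detection in parallel. As the results show, the higher the video resolution, the larger the gain from parallel processing.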

(Image source: provided by 曾成訓)
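The following is a rough illustration of the idea, not the author's Appendix F code. It keeps up to `max_pending` detection jobs running in a `multiprocessing.Pool` via `apply_async`, and on each frame returns the boxes from the most recently finished job, so the display loop never waits for detection. `detect_faces` is a hypothetical stand-in for `getFaces()`, and `AsyncFaceDetector` is a name invented for this sketch.

```python
import time
from collections import deque
from multiprocessing import Pool


def detect_faces(frame):
    """Hypothetical stand-in for getFaces(); a real version would run
    detectMultiScale on the frame inside the worker process."""
    time.sleep(0.05)  # simulate detection work
    return [(frame, frame, 10, 10)]


class AsyncFaceDetector:
    """Submits frames to a pool without blocking and returns the boxes
    from the most recently finished job."""

    def __init__(self, pool, detect_fn=detect_faces, max_pending=3):
        self.pool = pool
        self.detect_fn = detect_fn
        self.max_pending = max_pending  # roughly one job per worker core
        self.pending = deque()
        self.boxes = []

    def update(self, frame):
        # Collect finished jobs in submission order so an older result
        # never overwrites a newer one
        while self.pending and self.pending[0].ready():
            self.boxes = self.pending.popleft().get()
        # Submit the current frame only if a worker slot is free
        if len(self.pending) < self.max_pending:
            self.pending.append(self.pool.apply_async(self.detect_fn, (frame,)))
        return self.boxes


if __name__ == "__main__":
    # Leave one of the four cores for the display loop itself
    with Pool(processes=3) as pool:
        detector = AsyncFaceDetector(pool, max_pending=3)
        for frame_id in range(10):
            print(frame_id, detector.update(frame_id))
            time.sleep(0.033)  # roughly a 30 fps display loop
```

Each `apply_async` call pickles its arguments, so sending the shrunken grayscale frame rather than the full BGR frame should reduce copying overhead. The cascade classifier would also need to be created inside each worker, for example through the `initializer` argument of `Pool`. Because the boxes arrive a few frames late, they may trail fast-moving faces slightly.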

Code appendix

A. Plain playback:

```python
import cv2
import time

videoFile = "540p.mp4"
full_screen = True
win_name = "FRAME"

# FPS
fps = 0
start = time.time()
last_time = time.time()
last_frames = 0

def exit_app():
    camera.release()
    cv2.destroyAllWindows()

def fps_count(total_frames):
    # Recompute the average FPS roughly every 10 seconds
    global last_time, last_frames, fps
    timenow = time.time()
    if (timenow - last_time) > 10:
        fps = (total_frames - last_frames) / (timenow - last_time)
        #print("FPS: {0}".format(fps))
        last_time = timenow
        last_frames = total_frames
    return fps

camera = cv2.VideoCapture(videoFile)

if full_screen is True:
    cv2.namedWindow(win_name, cv2.WINDOW_NORMAL)
    cv2.setWindowProperty(win_name, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

grabbed = True
ii = 0

while camera.isOpened():
    (grabbed, img) = camera.read()
    if not grabbed:  # end of the video or a read error
        break
    ii += 1
    img = cv2.putText(img, "FPS:{}".format(round(fps_count(ii), 1)), (30, 60),
                      cv2.FONT_HERSHEY_SIMPLEX, 2.0, (0, 0, 255), 3, cv2.LINE_AA)
    cv2.imshow(win_name, img)
    key = cv2.waitKey(1)
    if key == ord('q'):  # press q to quit
        break

exit_app()
```

B. Face detection:

```python
import cv2
import time

videoFile = "540p.mp4"
# This loads OpenCV's LBP frontal-face cascade; a Haar cascade XML
# (e.g. haarcascade_frontalface_default.xml) is loaded the same way.
face_cascade = cv2.CascadeClassifier('xml/lbpcascade_frontalface.xml')
if face_cascade.empty():
    raise SystemExit("Cannot load the cascade XML file")
cascade_scale = 1.1       # scaleFactor
cascade_neighbors = 4     # minNeighbors
minFaceSize = (30, 30)    # minSize (w, h) in pixels
full_screen = True
win_name = "FRAME"

# FPS
fps = 0
start = time.time()
last_time = time.time()
last_frames = 0

def exit_app():
    camera.release()
    cv2.destroyAllWindows()

def fps_count(total_frames):
    # Recompute the average FPS roughly every 10 seconds
    global last_time, last_frames, fps
    timenow = time.time()
    if (timenow - last_time) > 10:
        fps = (total_frames - last_frames) / (timenow - last_time)
        #print("FPS: {0}".format(fps))
        last_time = timenow
        last_frames = total_frames
    return fps

def getFaces(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(
        gray,
        scaleFactor=cascade_scale,
        minNeighbors=cascade_neighbors,
        minSize=minFaceSize,
        flags=cv2.CASCADE_SCALE_IMAGE
    )
    bboxes = []
    for (x, y, w, h) in faces:
        if w > minFaceSize[0] and h > minFaceSize[1]:
            bboxes.append((x, y, w, h))
    return bboxes

camera = cv2.VideoCapture(videoFile)
width = int(camera.get(cv2.CAP_PROP_FRAME_WIDTH))    # get() returns a float
height = int(camera.get(cv2.CAP_PROP_FRAME_HEIGHT))  # get() returns a float

if full_screen is True:
    cv2.namedWindow(win_name, cv2.WINDOW_NORMAL)  # Create a named window
    cv2.setWindowProperty(win_name, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

grabbed = True
ii = 0

while camera.isOpened():
    (grabbed, img) = camera.read()
    if not grabbed:  # end of the video or a read error
        break
    faces = getFaces(img)
    for (x, y, w, h) in faces:
        cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)
    ii += 1
    img = cv2.putText(img, "FPS:{}".format(round(fps_count(ii), 1)), (30, 60),
                      cv2.FONT_HERSHEY_SIMPLEX, 2.0, (0, 0, 255), 3, cv2.LINE_AA)
    cv2.imshow(win_name, img)
    key = cv2.waitKey(1)
    if key == ord('q'):  # press q to quit
        break

exit_app()
```

C. Downscaled detection:

```python
import cv2
import time

videoFile = "540p.mp4"
face_cascade = cv2.CascadeClassifier('xml/lbpcascade_frontalface.xml')
cascade_scale = 1.1
cascade_neighbors = 4
minFaceSize = (30, 30)
full_screen = False
win_name = "FRAME"

# FPS
fps = 0
start = time.time()
last_time = time.time()
last_frames = 0

# New in this step: detection runs on a copy of the frame scaled by this ratio
resize_ratio = 0.75

def exit_app():
    camera.release()
    cv2.destroyAllWindows()

def fps_count(total_frames):
    global last_time, last_frames, fps
    timenow = time.time()
    if (timenow - last_time) > 10:
        fps = (total_frames - last_frames) / (timenow - last_time)
        #print("FPS: {0}".format(fps))
        last_time = timenow
        last_frames = total_frames
    return fps

def getFaces(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(
        gray,
        scaleFactor=cascade_scale,
        minNeighbors=cascade_neighbors,
        minSize=minFaceSize,
        flags=cv2.CASCADE_SCALE_IMAGE
    )
    bboxes = []
    for (x, y, w, h) in faces:
        if w > minFaceSize[0] and h > minFaceSize[1]:
            bboxes.append((x, y, w, h))
    return bboxes

camera = cv2.VideoCapture(videoFile)
#camera.set(cv2.CAP_PROP_FRAME_WIDTH, video_size[0])
#camera.set(cv2.CAP_PROP_FRAME_HEIGHT, video_size[1])
width = int(camera.get(cv2.CAP_PROP_FRAME_WIDTH))    # get() returns a float
height = int(camera.get(cv2.CAP_PROP_FRAME_HEIGHT))  # get() returns a float

if full_screen is True:
    cv2.namedWindow(win_name, cv2.WINDOW_NORMAL)  # Create a named window
    cv2.setWindowProperty(win_name, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

grabbed = True
ii = 0

while camera.isOpened():
    (grabbed, img) = camera.read()
    if not grabbed:  # end of the video or a read error
        break
    img_org = img.copy()
    # Detect on the shrunken frame ...
    img = cv2.resize(img, (int(img.shape[1] * resize_ratio), int(img.shape[0] * resize_ratio)))
    faces = getFaces(img)
    for (x, y, w, h) in faces:
        # ... then scale each box back to the original frame size
        x = int(x / resize_ratio)
        y = int(y / resize_ratio)
        w = int(w / resize_ratio)
        h = int(h / resize_ratio)
        # print(x, y, w, h)  # debug output; printing every frame can slow playback
        cv2.rectangle(img_org, (x, y), (x + w, y + h), (0, 255, 0), 2)
    ii += 1
    cv2.putText(img_org, "FPS:{}".format(round(fps_count(ii), 1)), (30, 60),
                cv2.FONT_HERSHEY_SIMPLEX, 2.0, (0, 0, 255), 3, cv2.LINE_AA)
    cv2.imshow(win_name, img_org)
    key = cv2.waitKey(1)
    if key == ord('q'):  # press q to quit
        break

exit_app()
```

D. Interval detection:

```python
import cv2
import time

videoFile = "540p.mp4"
face_cascade = cv2.CascadeClassifier('xml/lbpcascade_frontalface.xml')
cascade_scale = 1.1
cascade_neighbors = 4
minFaceSize = (30, 30)
full_screen = False
win_name = "FRAME"
interval = 5  # run detection once every 5 frames

# FPS
fps = 0
start = time.time()
last_time = time.time()
last_frames = 0

# Detection runs on a copy of the frame scaled by this ratio
resize_ratio = 0.75

def exit_app():
    camera.release()
    cv2.destroyAllWindows()

def fps_count(total_frames):
    global last_time, last_frames, fps
    timenow = time.time()
    if (timenow - last_time) > 10:
        fps = (total_frames - last_frames) / (timenow - last_time)
        last_time = timenow
        last_frames = total_frames
    return fps

def getFaces(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(
        gray,
        scaleFactor=cascade_scale,
        minNeighbors=cascade_neighbors,
        minSize=minFaceSize,
        flags=cv2.CASCADE_SCALE_IMAGE
    )
    bboxes = []
    for (x, y, w, h) in faces:
        if w > minFaceSize[0] and h > minFaceSize[1]:
            bboxes.append((x, y, w, h))
    return bboxes

camera = cv2.VideoCapture(videoFile)
width = int(camera.get(cv2.CAP_PROP_FRAME_WIDTH))    # get() returns a float
height = int(camera.get(cv2.CAP_PROP_FRAME_HEIGHT))  # get() returns a float

if full_screen is True:
    cv2.namedWindow(win_name, cv2.WINDOW_NORMAL)  # Create a named window
    cv2.setWindowProperty(win_name, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

grabbed = True
ii = 0

while camera.isOpened():
    (grabbed, img) = camera.read()
    if not grabbed:  # end of the video or a read error
        break
    img_org = img.copy()
    # Run detection only on every `interval`-th frame
    if ii % interval == 0:
        img = cv2.resize(img, (int(img.shape[1] * resize_ratio), int(img.shape[0] * resize_ratio)))
        faces = getFaces(img)
        # Boxes are drawn only on detection frames, which causes the flicker
        # described in the main text
        for (x, y, w, h) in faces:
            x = int(x / resize_ratio)
            y = int(y / resize_ratio)
            w = int(w / resize_ratio)
            h = int(h / resize_ratio)
            cv2.rectangle(img_org, (x, y), (x + w, y + h), (0, 255, 0), 2)
    ii += 1
    cv2.putText(img_org, "FPS:{}".format(round(fps_count(ii), 1)), (30, 60),
                cv2.FONT_HERSHEY_SIMPLEX, 2.0, (0, 0, 255), 3, cv2.LINE_AA)
    cv2.imshow(win_name, img_org)
    key = cv2.waitKey(1)
    if key == ord('q'):  # press q to quit
        break

exit_app()
```

E. Interval detection (continuous):

```python
import cv2
import time

videoFile = "540p.mp4"
face_cascade = cv2.CascadeClassifier('xml/lbpcascade_frontalface.xml')
cascade_scale = 1.1
cascade_neighbors = 4
minFaceSize = (30, 30)
full_screen = False
win_name = "FRAME"
interval = 5         # run detection once every 5 frames
resize_ratio = 0.75  # detection runs on a copy of the frame scaled by this ratio

# FPS
fps = 0
start = time.time()
last_time = time.time()
last_frames = 0

def exit_app():
    camera.release()
    cv2.destroyAllWindows()

def fps_count(total_frames):
    global last_time, last_frames, fps
    timenow = time.time()
    if (timenow - last_time) > 10:
        fps = (total_frames - last_frames) / (timenow - last_time)
        last_time = timenow
        last_frames = total_frames
    return fps

def getFaces(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(
        gray,
        scaleFactor=cascade_scale,
        minNeighbors=cascade_neighbors,
        minSize=minFaceSize,
        flags=cv2.CASCADE_SCALE_IMAGE
    )
    bboxes = []
    for (x, y, w, h) in faces:
        if w > minFaceSize[0] and h > minFaceSize[1]:
            bboxes.append((x, y, w, h))
    return bboxes

camera = cv2.VideoCapture(videoFile)
width = int(camera.get(cv2.CAP_PROP_FRAME_WIDTH))    # get() returns a float
height = int(camera.get(cv2.CAP_PROP_FRAME_HEIGHT))  # get() returns a float

if full_screen is True:
    cv2.namedWindow(win_name, cv2.WINDOW_NORMAL)  # Create a named window
    cv2.setWindowProperty(win_name, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)

grabbed = True
ii = 0
faces = []  # latest boxes, already scaled to the original frame size

while camera.isOpened():
    (grabbed, img) = camera.read()
    if not grabbed:  # end of the video or a read error
        break
    img_org = img.copy()
    if ii % interval == 0:
        img = cv2.resize(img, (int(img.shape[1] * resize_ratio), int(img.shape[0] * resize_ratio)))
        # Replace the kept boxes only when a new detection runs
        faces = [(int(x / resize_ratio), int(y / resize_ratio),
                  int(w / resize_ratio), int(h / resize_ratio))
                 for (x, y, w, h) in getFaces(img)]
    # Draw the latest boxes on every frame so they persist between detections
    for (x, y, w, h) in faces:
        cv2.rectangle(img_org, (x, y), (x + w, y + h), (0, 255, 0), 2)
    ii += 1
    cv2.putText(img_org, "FPS:{}".format(round(fps_count(ii), 1)), (30, 60),
                cv2.FONT_HERSHEY_SIMPLEX, 2.0, (0, 0, 255), 3, cv2.LINE_AA)
    cv2.imshow(win_name, img_org)
    key = cv2.waitKey(1)
    if key == ord('q'):  # press q to quit
        break

exit_app()
```

(Republished from the CH.TSENG blog with the author's permission; original article link. Editor: 賴佩萱)
